In 2002, I was seconded to the University of the South Pacific for a few weeks to teach Internet programming to students and staff. At the time, Fiji’s Internet connection was a rather inadequate satellite link – you could literally greet the incoming bits individually. The Southern Cross Cable had just been laid, but politics had it that there was no connection to it. So I put up with hitting “Get Mail” on my laptop as I got into the office and, come lunchtime a few hours later, having at least some of my e-mails. I later got to experience the joys of satellite Internet elsewhere in the Pacific, in Tonga and Samoa, where nations with populations into the hundreds of thousands had to share satellite links with at best a couple of dozen Mbit/s of capacity.

In 2011, ‘Etuate Cocker started a PhD with me. As a Tongan, he had a personal interest in documenting and improving connectivity in the Pacific. Together (and with the help of a lot of colleagues literally around the world), we built the IIBEX beacon network, which documented rather clearly the quality of connectivity available in satellite-connected places such as (then) Tonga, Niue, Tuvalu or the Cook Islands. We were able to document the improvements seen when islands connected to fibre or upgraded their satellite connection. Still, it became clear that a fibre connection would likely stay literally out of reach for a number of islands, where small population / GDP and remoteness conspire against any business case.

At around the same time ‘Etuate started, I became aware of the work by Muriel Médard and colleagues on network coding and TCP. This seemed like a way of dealing with at least some of the packet loss on satellite links, and so we conferred with Muriel: The idea of network coding the Pacific was born. Maybe we could do something to add value to satellite links. Muriel linked us with Steinwurf, a Danish company she had co-founded with Frank Fitzek – they had the software we needed (well, more or less).

Trying it out on site

With the help of PICISOC, ISIF Asia and Internet NZ, we went out to four islands in the Pacific: Rarotonga (Cook Islands), Niue, Funafuti (Tuvalu), and eventually to Aitutaki (Cook Islands) to deploy network coding gear (mainly consisting of an encoder/decoder machine, and in Aitutaki and Rarotonga also of a small network with two Intel NUCs each). Our respective local partners were Telecom Cook Islands (now Bluesky), Internet Niue and Tuvalu Telecom.

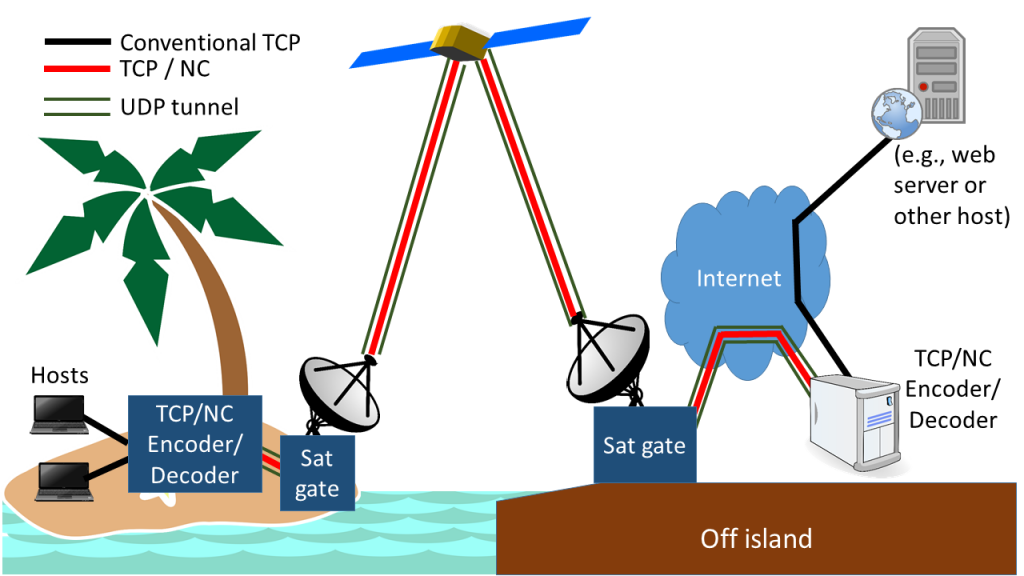

Off-island, we have an encoder/decoder at the University of Auckland and another at the San Diego Supercomputer Center (SDSC).

Péter Vingelmann from Steinwurf chipped in and not only provided us with exactly the software we needed, but also accompanied us via Skype during the deployments. He works remotely from Hungary, which for him meant absolutely ungodly hours! The software we now use encodes any IP packet arriving at the encoder destined for a host on the other side of the link. More specifically, it takes a number of incoming IP packets and replaces them with a larger number of coded UDP packets, which are then sent to the decoder on the other side of the link, which recovers the original IP packets. So we really code everything: ICMP, UDP, TCP – but it is the TCP where coding really makes a difference.
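To make the "fewer packets in, more coded packets out" idea concrete, here is a toy sketch. It assumes random linear coding over GF(2) (plain XOR combinations), which this post does not specify, and the names `encode` and `decode` are hypothetical; this is not Steinwurf's software or API. It also assumes all packets in a batch have been padded to the same length.

```python
import random


def encode(packets, n_coded, rng):
    """Turn k equal-length packets into n_coded coded packets.

    Each coded packet is a pair (coeffs, payload): coeffs is a k-bit mask
    saying which source packets were XORed together to form the payload.
    """
    k = len(packets)
    size = len(packets[0])
    coded = []
    for _ in range(n_coded):
        coeffs = 0
        while coeffs == 0:  # an all-zero combination carries no information
            coeffs = rng.getrandbits(k)
        value = 0
        for i in range(k):
            if (coeffs >> i) & 1:
                value ^= int.from_bytes(packets[i], "big")
        coded.append((coeffs, value.to_bytes(size, "big")))
    return coded


def decode(received, k, size):
    """Recover the k source packets by Gaussian elimination over GF(2).

    Returns None if the surviving coded packets do not span all k sources.
    """
    pivots = {}  # pivot bit -> (coeffs, payload as int), kept fully reduced
    for coeffs, payload in received:
        value = int.from_bytes(payload, "big")
        # Remove every already-known pivot from the incoming combination.
        for bit, (p_coeffs, p_value) in pivots.items():
            if (coeffs >> bit) & 1:
                coeffs ^= p_coeffs
                value ^= p_value
        if coeffs == 0:
            continue  # linearly dependent: nothing new learned
        new_bit = coeffs.bit_length() - 1
        # Remove the new pivot from the rows we already hold.
        for bit, (p_coeffs, p_value) in list(pivots.items()):
            if (p_coeffs >> new_bit) & 1:
                pivots[bit] = (p_coeffs ^ coeffs, p_value ^ value)
        pivots[new_bit] = (coeffs, value)
    if len(pivots) < k:
        return None
    return [pivots[i][1].to_bytes(size, "big") for i in range(k)]


if __name__ == "__main__":
    source = [b"pkt0", b"pkt1", b"pkt2", b"pkt3"]
    coded = encode(source, 6, random.Random())  # 4 packets in, 6 out
    survivors = coded[:2] + coded[3:]           # the link drops one packet
    print(decode(survivors, k=4, size=4))       # the sources, or None if unlucky
```

The point of the sketch is that the decoder does not care which coded packets arrive, only that enough independent ones do. Sending more coded packets than source packets is the overhead that buys tolerance to loss.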

One of the first insights was that no two sites are quite alike. Rarotonga and Aitutaki are both supplied by O3b medium earth orbit satellites, and in 2015 neither island quite managed to utilise all of its satellite bandwidth. Aitutaki had only just upgraded to O3b at the time and “unofficially” had more bandwidth available than we assumed – probably thanks to O3b quietly throwing spare bandwidth at the link. Its local access network was awaiting a variety of upgrades, so there simply wasn’t enough local demand on the link. Being on a Telecom Wifi hotspot, I had one of the best Internet connections I’ve ever had in the Pacific. Lucky Aitutaki! Network coding offered no gain here – the problem it solves simply didn’t exist.

The Aitutaki satellite ground station. The big dish at the back is the old geostationary link, the two smaller dishes at the front track the O3b MEO satellites for the new link.

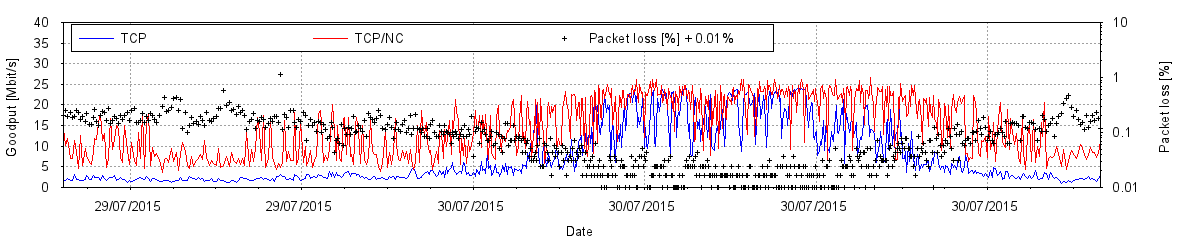

In the case of Rarotonga, which we had visited earlier, it had been an altogether different story: The link there had more bandwidth, but the demand was also much higher, causing severe TCP queue oscillation at peak times (see my APNIC technical blog on queue oscillation or watch me talk about queue oscillation on the APNIC YouTube feed with some cool graphics from APNIC thrown in). How could we tell? Quite simple: Packet loss increases during peak hours, but link utilisation stays capped well below capacity. That happens when the input queue to the satellite link overflows some of the time but clears at others. Here, the network coding gave us significant gains, especially after Péter made the overhead adaptive. With coding, peak time downloads were typically at least twice as fast – and sometimes much more than that.

If its packets are coded, TCP achieves much faster rates because a moderate degree of packet loss is hidden from the sender.

In Niue, which was on 8 Mbps inbound geostationary capacity at the time, packet loss was significant, but link utilisation was high, meaning that short TCP flows kept the input queue to the satellite link well filled. In scenarios such as these, coding TCP can give individual connections a leg up over conventional TCP. This was the case in Niue as well. However, as practically all of the traffic represents goodput, this comes at the expense of conventional TCP connections, and so becomes a question of protocol fairness. Internet Niue subsequently doubled its inbound capacity, and now looks more like Aitutaki when left alone, but recent experiments suggest that it doesn’t take a lot of extra traffic to trigger TCP queue oscillation.

In Tuvalu, we saw once again a combination of low link utilisation and high packet loss, and again coding allowed us to obtain higher data rates. In fact, in some cases, conventional TCP fared so badly that only coded TCP allowed us to complete the test downloads.
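The observations from the four sites boil down to a rough rule of thumb based on peak-time packet loss and link utilisation. The sketch below captures that rule; the function name `classify_link` and both thresholds are illustrative assumptions, not values we measured or used.

```python
def classify_link(utilisation, loss_rate, busy_threshold=0.9, loss_threshold=0.01):
    """Classify a satellite link from peak-time measurements.

    utilisation: fraction of link capacity in use (0.0 to 1.0)
    loss_rate:   fraction of packets lost (0.0 to 1.0)
    Both thresholds are assumed values chosen for illustration only.
    """
    lossy = loss_rate >= loss_threshold
    busy = utilisation >= busy_threshold
    if lossy and not busy:
        # Loss without a full link: the input queue overflows at some times
        # and drains at others (Rarotonga at peak time, Tuvalu).
        return "queue oscillation"
    if lossy and busy:
        # The link is full of goodput: coded flows gain only at the expense
        # of conventional TCP flows (Niue on 8 Mbps).
        return "saturated"
    # Little loss: the problem coding solves is absent (Aitutaki).
    return "no coding gain expected"


if __name__ == "__main__":
    print(classify_link(utilisation=0.6, loss_rate=0.05))
```

Only the first regime is one where coding recovers capacity that would otherwise go unused; in the second it raises a fairness question instead.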

Taking stock

Clearly, TCP queue oscillation plays a significant role in underperforming links. This came as something of a surprise, given that TCP queue oscillation as a phenomenon has been known since people first sent TCP traffic over satellite links. Moreover, it had been considered solved. Surely TCP stacks had evolved to cope with this, right?

Well, yes and no. The answer really lies in the history of satellite Internet. Firstly, early satellite connections in the 1980s and early 90s were dedicated point-to-point connections, which generally carried few parallel TCP sessions for very few hosts. In Rarotonga, we see queue oscillation only at times when there are over 2000 parallel inbound TCP flows.

Moreover, the networks connected at either end were generally not that fast at the time (10 Mbps Ethernet was considered state of the art back then), so the satellite links were less of a bottleneck than they are today: If you’re having to pare down from 1 Gbps to a couple of hundred Mbps or even just a few Mbps, there are quite a few more Mbps that you potentially have to drop.

In the 1990s, the Internet became a commodity and entire countries connected via satellite. However, in many cases (such as NZ), hundreds of thousands of users made for business cases that allowed for satellite capacities in the order of the capacity of the networks connected at either end. This more or less removed the bottleneck effect. No bottleneck, no TCP queue oscillation.

This leaves the islands: If the demand on an island falls into a bracket where it can only afford satellite capacity that represents a bottleneck, but the demand is large enough to generate a significant number of parallel TCP sessions, then TCP queue oscillation rears its ugly head. Add cruise ships, large drilling platforms and remote communities to the list.

So we know that coding can make individual TCP connections run faster when there’s TCP queue oscillation. But what about other techniques? Satellite people love performance-enhancing proxies (PEPs), add-ons to satellite connections that – generally speaking – get the sending satellite gateway to issue “preemptive” ACKs to the TCP sender. Some PEPs fully break the connection between the two endpoints into multiple connections and even run high-latency variants of TCP across the satellite link itself. However, network people are wary of PEPs as they break the cherished end-to-end principle as well as a number of protocols. There’s also very little known about how PEPs scale with large numbers of parallel sessions – some of the literature suggests that they may not scale well at all. In fact, we have yet to encounter a PEP in the field in the Pacific that will actually reveal itself as such. It’s far more common to encounter WAN accelerators – devices built primarily with links between data centres in metropolitan area networks in mind.

Péter Vingelmann, yours truly and Janus Heide (Steinwurf) during a visit to ESA’s ESTEC in Noordwijk. ESA suggested that PEPs should work just as well – but would they?

Moreover, so far we’ve only coded individual connections, and they still had to share their journey across the satellite link with conventional TCP under queue oscillation conditions. We don’t quite know yet how coding will fare if we code the traffic for an entire island. We think that the software will scale, and having fewer errors appear at the senders should dampen the queue oscillation, which should in theory allow us to lower the coding overhead. That said, trying this out requires a change in network topology on the island and/or across the satellite link, as the island clients can’t be in the same IP subnet as the satellite gateway on the island. This is something we can’t try easily on a production system.

So, how about simulation?