Building a satellite simulator

When we first went to the islands, we also tried to simulate what was happening on the links using a software network simulator. We knew from our measurements that packet loss was not an issue during off-peak times, so we knew the space segment was working properly in all cases we had looked at. This led us to model the satellite link as a lossless, high latency link with a bandwidth constraint.
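As a rough illustration of that model, here is a minimal sketch of a lossless link with a rate limit and a fixed one-way delay. The class name, the parameter names and the numbers in the usage example are assumptions made for illustration, not values from our simulator. The optional byte limit stands in for a finite input queue in front of the link; packets are only ever dropped there, never on the link itself.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SatLink:
    """Lossless link with a rate limit and a fixed one-way delay (illustrative)."""
    rate_bps: float                    # link bandwidth in bits per second (assumed)
    delay_s: float                     # one-way propagation delay in seconds (assumed)
    queue_bytes: Optional[int] = None  # hypothetical input queue limit; None = unbounded

    def deliver(self, packets: List[Tuple[float, int]]) -> List[Optional[float]]:
        """Return each packet's arrival time, or None if the input queue dropped it.

        packets: (send_time_s, size_bytes) pairs, sorted by send time.
        """
        arrivals: List[Optional[float]] = []
        in_queue: List[Tuple[float, int]] = []  # (time transmission finishes, size)
        link_free_at = 0.0
        for send_time, size in packets:
            # Packets that have finished transmitting no longer occupy the queue.
            in_queue = [(t, s) for t, s in in_queue if t > send_time]
            queued = sum(s for _, s in in_queue)
            if self.queue_bytes is not None and queued + size > self.queue_bytes:
                arrivals.append(None)  # dropped at the queue, not on the link
                continue
            # The bandwidth constraint: packets are serialised one after another.
            start = max(send_time, link_free_at)
            link_free_at = start + size * 8 / self.rate_bps
            in_queue.append((link_free_at, size))
            # The latency: every transmitted packet arrives delay_s later.
            arrivals.append(link_free_at + self.delay_s)
        return arrivals


# Usage with made-up numbers: an 8 Mbps link with 250 ms one-way delay.
link = SatLink(rate_bps=8_000_000, delay_s=0.25)
times = link.deliver([(0.0, 1000), (0.0, 1000)])
```

Without a queue limit, every packet eventually arrives. With a small limit, bursts that exceed it are dropped before they ever reach the link.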

Our first stab was to look at simulation in software alone. This confirmed that links with a significant number of longish parallel downloads would oscillate, but it also became apparent pretty quickly that this approach had its limits:

- Most sessions on real links don’t carry a lot of data – in fact, long downloads of more than a few kB are comparatively rare. Instead, real links carry a huge number of sessions over relatively short time periods. Many of them are very small and short and don’t get to experience queue oscillation in full: by the time the sender gets the first ACK, the session data has all been transferred. However, they contribute to the congestion at the input queue, of course, and are affected by packet drops when the queue overflows. Together with the few larger volume sessions, they still add up to a large number of concurrent TCP sessions. If we want to simulate this, we need to generate small sessions over a long period of time in order to allow large volume sessions to get out of TCP slow start mode. In a software-based simulator, generating this large number of sessions represents a huge computational load. Real-time simulations were out of the question.

- Software-based simulators inherently process network nodes and events at network nodes sequentially. In real networks, things happen in parallel, with jittery clocks running at wildly different rates. So it’s a lot harder to replicate the chaotic traffic mix seen in real networks.

- Any software-based simulator faces a basic conundrum: It can leverage networking functionality already present on the system that the simulator runs on, e.g., the local TCP stack. This almost inevitably involves use of the local clock for timeouts etc. If the simulation is fast enough to meet real-time standards, this is of course fine, and the use of real components makes the results more realistic. If we can’t simulate fast enough, however, we can get erroneous results. Alternatively, we can simulate everything to the simulator’s own timebase, but then there is the effort of replication of functionality – and the question as to whether the replication is realistic.

So we resolved that the simulation needed to be done, at least in large parts, in hardware. Having real computers act as clients, servers and peers means we can work mostly with real network componentry. This leaves software to simulate those bits for which hardware is prohibitively expensive – in our case that’s of course mainly the satellite link itself.

It so happened that the University of Auckland’s satellite TV receiving station was being decommissioned at the time, and we managed to inherit two identical 19″ racks, seven feet tall. Once re-homed in our lab, all we had to do was find the hardware to fill them with.

Lei Qian in front of the racks during an early stage of construction in mid-February 2016. A lot of parts have moved around since, and space in some of the racks is now at a premium!

The main challenge in any simulation of this kind of scenario is to simulate the inbound link into the island – it generally carries the bulk of the traffic as servers sit mostly somewhere off-island and clients sit by and large on the island. The standard configuration in the Pacific is to provide capacity on the inbound link that is four times the capacity of the outbound link.

In our case, the clients’ main job is to connect to the servers and receive data from the servers. Because our clients don’t really have users that want to watch videos or download software, we don’t really need them to do much beyond the receiving. They don’t even need to store the received data anywhere on disk (so disk I/O is never an issue). All they need to record is how much data they received, and when. This isn’t a particularly computing-intensive task, and even small machines such as Raspberry Pis and mini PCs can happily support a significant number of such clients in software. So we’ve filled one of the racks with these sorts of clients. This is the “island” rack.
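A minimal sketch of such a client follows. It is not our actual client software: the function name and buffer size are assumptions made for illustration. It reads from an already connected socket, throws the payload away, and keeps only a timestamp and a byte count per read.

```python
import socket
import time
from typing import List, Tuple


def receive_and_log(sock: socket.socket, bufsize: int = 65536) -> List[Tuple[float, int]]:
    """Read from sock until the peer closes; log (timestamp, bytes) per read.

    The payload itself is discarded, so nothing is ever written to disk.
    """
    log: List[Tuple[float, int]] = []
    while True:
        chunk = sock.recv(bufsize)
        if not chunk:  # an empty read means the sender closed the connection
            break
        log.append((time.monotonic(), len(chunk)))
    return log


# Usage: a local socket pair stands in for a real server connection.
server_end, client_end = socket.socketpair()
server_end.sendall(b"x" * 4000)
server_end.close()
records = receive_and_log(client_end)
client_end.close()
total_bytes = sum(n for _, n in records)
```

The work per read is a timestamp and a length, which is why even a small machine can run many of these clients side by side.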

The other rack is called the “world” rack and houses the simulated satellite link and the “servers of the world”. We realised pretty quickly that a clean approach would see us use a dedicated machine for the satellite link itself (I’ll drop the word “simulated” from here on), and one dedicated machine at either end of the link for network coding, PEPing, and measurements. When we don’t code or PEP, these machines simply act as plain vanilla routers and forward the traffic to and from the link.

The next question was where we’d get the hardware from.

For the world side, Nevil Brownlee already had a small number of Super Micro servers which he was happy to let us use as part of the project. Thanks to a leftover balance in one of Brian Carpenter’s research accounts, we were able to buy a few more, plus ten Intel NUCs. Proceeds from a conference bought screens, keyboards, mice, some cables and switches. The end-of-financial-year mop-up 2015 bought us our first dozen Raspberry Pis (model 2B). Peter Abt, a volunteer from Germany, set the NUCs and Pis up.

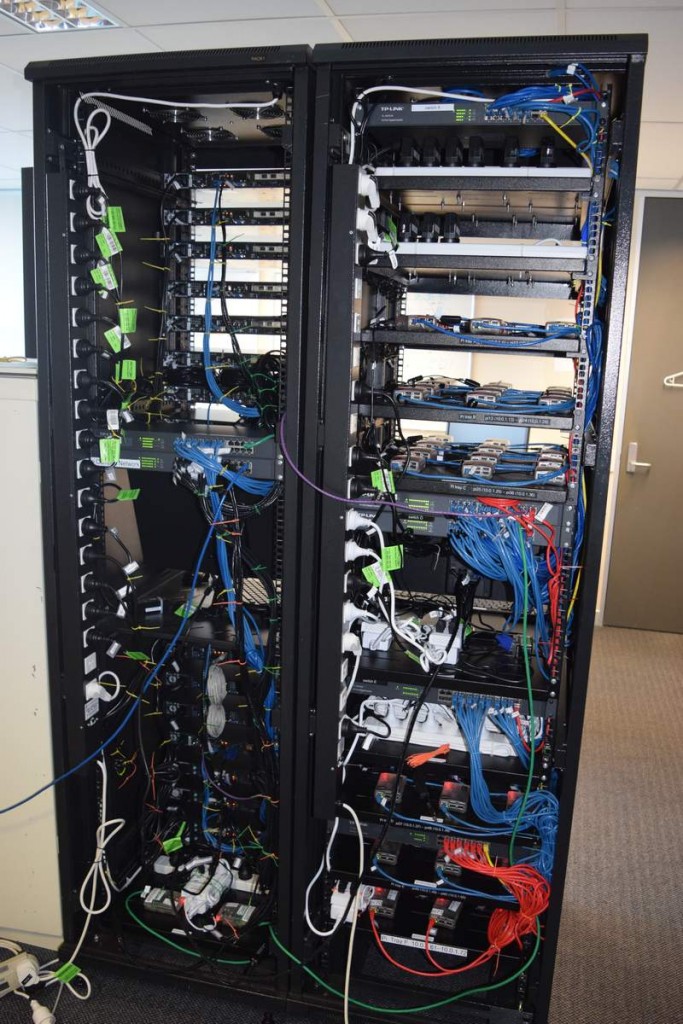

All bits in place – the simulator at the end of June 2016: The left rack simulates the island clients, with 10 Intel NUCs (top shelves) and 84 Raspberry Pis (red lights). The right rack houses the Super Micro servers which simulate the satellite link, coding equipment, and “world” servers. Two consoles allow us to work locally with the machines.

We also applied to Internet NZ again, whose grant paid for a lot more Pis (the new Model 3B with quad-core processors) and switches and another server, plus a lot of small parts and a few more rack shelves. A departmental capex grant saw us add four more Super Micro servers to the “world” fleet.

So we’re now up to 96 Raspberry Pis and 10 Intel NUCs on the “island” side of the simulator, four Super Micros for the “sat link” and the titrators/encoders/decoders/PEPs on either side of the link, plus fourteen “world” servers that supply the data.

We’ve also gang-pressed a PC to serve as the central data storage back end, and there are two special-purpose “command and control Pis” either side of the “link” to assist us in tasks we don’t want to do on heavily loaded machines during an experiment.

About a dozen Gigabit Ethernet switches tie the whole assembly together.

In order to let us work with the simulator from the outside, we have a number of access options: All Super Micros are connected to the university network, which gives us worldwide access via VPN or login servers. The two special-purpose Pis and the storage server are also connected to the university network.

This gives us the ability to monitor processes on the link or the servers without having to use the network whose operation we want to observe, i.e., the traffic that our activities cause doesn’t interfere with the experiment traffic.

The exceptions are the Pis and NUCs, which don’t have a standard connection to the university network. They can, however, be co-opted in through a number of USB dongles.

All machines are set up to operate headless (without keyboard, mouse or screen), but we have two consoles in order to have local access, e.g., for network configuration purposes.

Power load is always a consideration with a facility like this. Even though the machines don’t consume a lot of power each, in combination, they pull a bit. While it’s still comfortably within the limits of a power socket, that power eventually turns to heat, and Lei reports that the room is pretty cosy now that spring is underway!

The building of the simulator more or less coincided with the electrical safety inspection that the university conducts every few years. The poor sparkies that got tasked with the job expected at best a few cables and power supplies in each room. I’m not sure what they thought when they saw the simulator, but I suspect we had more bits for them to test than some departments. The sparkies now greet me whenever they see me on campus!

The rear view shot of the simulator shows the significant amount of cabling involved: network, mains and low voltage!

Five 24-port Gigabit Ethernet switches sit at the back of the island rack. Another two tie the “world servers” and satellite link together, one as part of the simulator network, the other to give the servers direct Internet access as well. Lei has skillfully hidden a lot of the cabling so it’s barely visible from the front. By the way: That violet cable goes to the “satellite link” over in the “world” rack, the green cables at the bottom are for the command & control Pis, visible at the bottom of the world rack on the left.

Update 2017: Additional hardware for the simulator

In mid-2017, we received around NZ$45k in additional hardware in the form of 15 additional Super Micro servers, four Gigabit Ethernet copper taps (a fifth one made its way to us from Nevil Brownlee’s shelf), a number of additional switches and enough cabling to hook everything up, as well as an extra 19″ rack to expand into. These have now all arrived and are being configured. Among other things, the extra hardware allows us to separate traffic capture, encoding/decoding and PEP functionality while beefing up the diversity of our world server traffic.

Update 2018: Hardware upgrade and storage servers

After running out of storage for our experiments, we now have two storage servers with 96 TB of RAID storage each. This should last us for a few years.

Update 2020: More hardware still!

In 2019, we were once again funded by ISIF Asia via APNIC (thanks folks!) in order to investigate whether we could prevent coded traffic from clogging up the link to its own detriment, which is what happens in all-of-island coding at the moment. Along with the 2019 CAPEX round, this let us replace a number of older Super Micros and, most importantly, add two titrator machines to the satellite link chain. They inspect coded traffic and prevent surplus coding redundancy from reaching the link.