Baselines are experiments undertaken to establish how a system performs without, or prior to, modification. In our case, we take baselines in order to see, for example:

- What goodput can we achieve without coding on a given link under certain load conditions and queue sizes?

- How does the input queue to the satellite link behave during such base cases?

- How long would a certain size download take in such a base case?
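The last question reduces to simple arithmetic once a baseline goodput has been measured. The sketch below is a minimal idealised estimate, assuming the whole download proceeds at the measured goodput; the function name `download_time_s` is hypothetical, and because it ignores connection setup and TCP slow start, real times over a GEO link will be longer, particularly for small downloads.

```python
def download_time_s(size_bytes: float, goodput_bps: float) -> float:
    """Idealised transfer time: payload bits divided by measured goodput.

    Assumption: the transfer runs at the measured goodput from start to
    finish, so connection setup and TCP slow start are ignored.
    """
    if goodput_bps <= 0:
        raise ValueError("goodput must be positive")
    return size_bytes * 8 / goodput_bps


# Hypothetical example: a 100 MB download at a measured goodput of 4 Mb/s.
print(download_time_s(100e6, 4e6))
```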

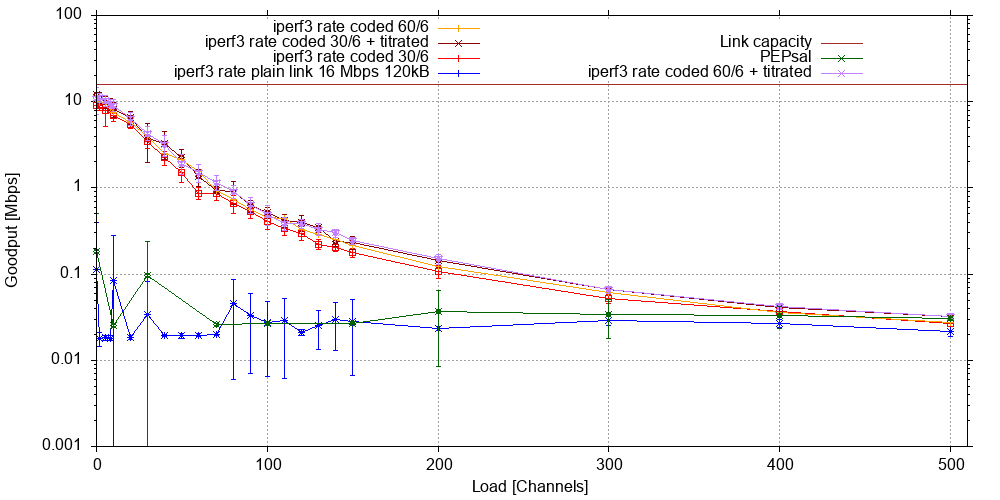

This page shows the results of a number of such baseline experiments. The plots shown here are only a small selection of the data collected.

Fig. 1 above shows the aggregate goodput across a simulated 16 Mb/s GEO link for a given number of channels, with a 300 s iperf3 TCP transfer running within a 600 s experiment on the new 2021 userland link emulation. The input queue capacity here is 120 kB. This is less than the more conventional 1000-4000 kB normally deployed on this type of link, but more in line with the recommendations of Appenzeller et al. [1]. Conclusion: under load, PEPsal (green curve) gives no overall gain over a plain link (blue), but coding (yellow and orange) does, with titration (purple curves) adding an extra 1 Mb/s or so at the point where traffic saturates the link.
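To see why 120 kB can be a reasonable queue size, the sketch below evaluates the Appenzeller et al. rule B = RTT × C / √n, where C is the link capacity and n the number of concurrent long-lived TCP flows. The 600 ms round-trip time is an assumption for a GEO path, not a value taken from these experiments, and the function name is hypothetical. Under that assumption the n = 1 case (the classic bandwidth-delay product) comes out at 1200 kB, inside the conventional range, while around 100 concurrent flows bring the rule down to about 120 kB. This is an illustration of the arithmetic only, not a claim about how many flows were active in the experiments.

```python
import math


def appenzeller_buffer_bytes(rtt_s: float, capacity_bps: float, n_flows: int) -> float:
    """Buffer size per Appenzeller et al.: B = RTT * C / sqrt(n).

    With n = 1 this is the full bandwidth-delay product (the classic rule
    of thumb); with many desynchronised long-lived TCP flows the required
    buffer shrinks with the square root of the flow count.
    """
    bdp_bytes = rtt_s * capacity_bps / 8
    return bdp_bytes / math.sqrt(n_flows)


RTT_S = 0.6      # assumed GEO round-trip time, not a measured value
CAPACITY = 16e6  # 16 Mb/s simulated link

for n in (1, 10, 100):
    print(n, "flows:", round(appenzeller_buffer_bytes(RTT_S, CAPACITY, n) / 1000), "kB")
```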

Fig. 2 looks at the goodput achieved by the 300 s iperf3 transfer on its own. On satellite links, large transfers usually don’t fare well, because TCP queue oscillation induces packet loss that the sender does not detect in a timely manner. This is evident here for both the plain link and the PEPsal-enhanced link. Note that PEPsal achieves a significant gain over baseline at very low background traffic loads, but is dwarfed by the coded results, which are almost an order of magnitude above baseline at the point where link saturation sets in. This shows that the overall coding gain is not achieved at the expense of flows of a particular size: coding floats all boats!
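A per-transfer goodput such as the one in Fig. 2 can be read from iperf3's JSON output (`iperf3 -J`). The sketch below assumes the standard TCP report layout, in which the end-of-test summary sits under `end` → `sum_received` → `bits_per_second`; it is a hypothetical helper, not the tooling used for these experiments. Using the receiver-side sum counts only delivered bytes, which matches the notion of goodput. The ratio helper expresses a coded or PEPsal result as a multiple of the plain-link baseline.

```python
import json


def load_report(path: str) -> dict:
    """Load a report saved from `iperf3 -J` (file name is up to the user)."""
    with open(path) as f:
        return json.load(f)


def iperf3_goodput_mbps(report: dict) -> float:
    """Receiver-side goodput in Mb/s, assuming iperf3's TCP JSON layout."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def gain_over_baseline(candidate_mbps: float, baseline_mbps: float) -> float:
    """Goodput of a candidate configuration as a multiple of the baseline."""
    return candidate_mbps / baseline_mbps
```

For example, `gain_over_baseline(iperf3_goodput_mbps(load_report("coded.json")), iperf3_goodput_mbps(load_report("plain.json")))` compares two runs, where both file names are hypothetical.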

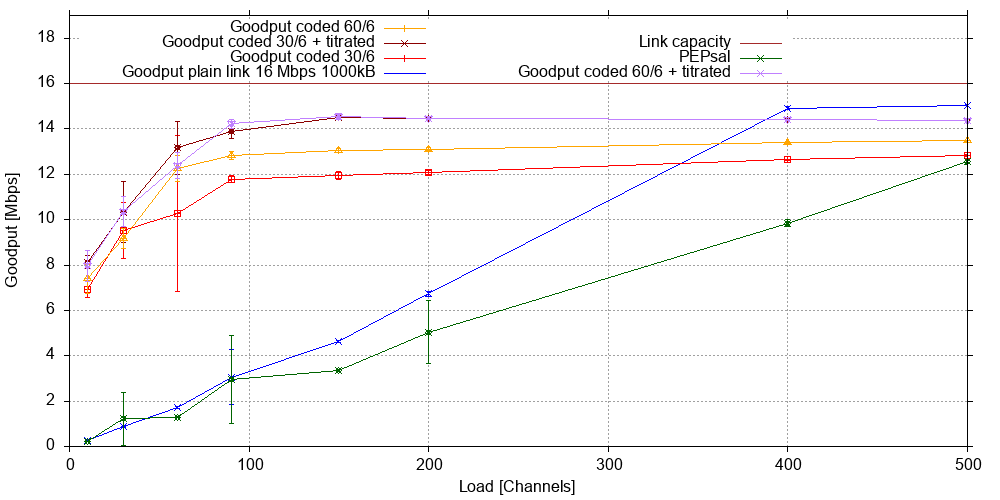

Fig. 3 shows the same arrangement as Fig. 1, but for a 1000 kB (1 MB) input queue. Contrary to conventional wisdom, we don’t observe an overall goodput gain for the plain link here, which lends further credence to Appenzeller’s observations. Coded traffic does benefit from the larger buffer, however, albeit at the expense of a higher maximum RTT, which is bad for real-time protocols that may be running alongside.
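The RTT penalty of the larger buffer can be bounded with a one-line calculation: a full drop-tail queue takes buffer size divided by link rate to drain. The sketch below assumes 1 kB = 1000 bytes and a queue served at the full 16 Mb/s, ignoring framing overhead; the function name is hypothetical. Under those assumptions a full 120 kB queue adds up to 60 ms to the RTT, whereas a full 1000 kB queue adds up to 500 ms.

```python
def max_queueing_delay_s(buffer_bytes: float, capacity_bps: float) -> float:
    """Time to drain a completely full queue at the link rate."""
    return buffer_bytes * 8 / capacity_bps


CAPACITY = 16e6  # 16 Mb/s simulated GEO link

for buf_kb in (120, 1000):
    delay_ms = max_queueing_delay_s(buf_kb * 1000, CAPACITY) * 1000
    print(f"{buf_kb} kB queue: up to {delay_ms:.0f} ms of extra RTT")
```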

Fig. 4 shows the 1000 kB version of Fig. 2. Note that PEPsal has a clear edge over the plain link in this classic configuration, but coding outperforms both PEPsal and baseline over the entire load range.

[1] G. Appenzeller, I. Keslassy, N. McKeown, “Sizing Router Buffers,” ACM SIGCOMM Comput. Commun. Rev., 34 (4), Aug. 2004, pp. 281–292