The simulator essentially consists of five networks:

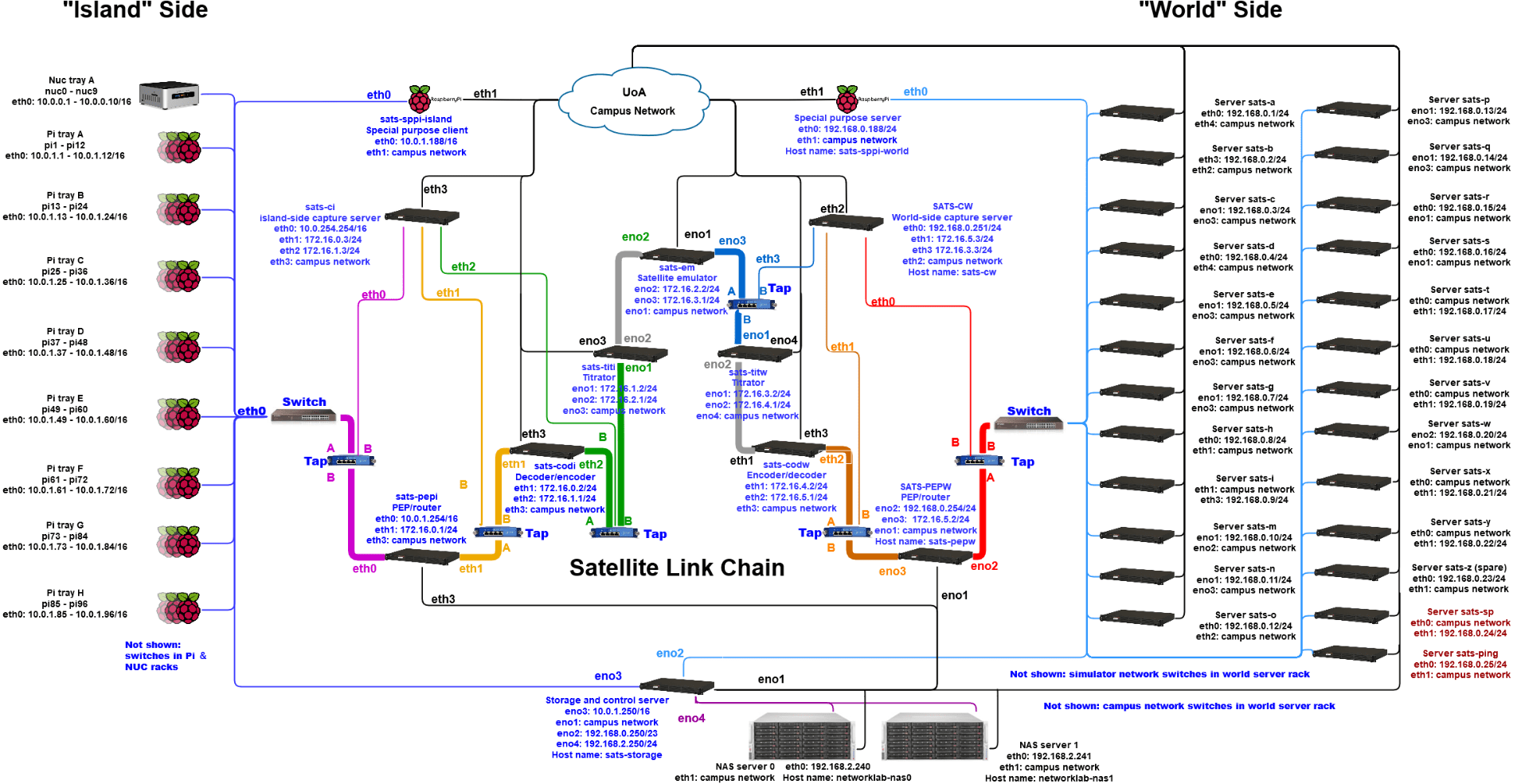

- The simulator “world” network (light blue), which ties the “world servers” A, B, C, D, E, F, G, H, I, M, N, O, P, Q, R, S, T, U, V, W, X, Y, and Z to the “world-facing” router/PEP machine sats-pepw on 192.168.0.254, the storage and command & control machine sats-storage on 192.168.0.190, and the special purpose machines sats-sppi-world (a Raspberry Pi) on 192.168.0.188 and sats-sp (a Super Micro). The world servers implement their own ingress/egress delays to simulate a terrestrial packet delay distribution.

- The simulator “island” network (dark blue), which ties the “island clients” (Pis and NUCs) to the “island-facing” router/PEP machine sats-pepi, the storage and command & control machine sats-storage on 10.0.1.190, and the special purpose machine sats-sppi-island on 10.0.1.188 (also a Raspberry Pi).

- The “sat link chain” (thick multi-coloured network) between servers sats-pepi, sats-codi, sats-em (the actual satellite emulator that implements latencies and bandwidth constraints for the satellite itself), sats-codw and sats-pepw. The two machines sats-codw and sats-codi are the network coding encoders and decoders. As of early 2020, we have also added two new machines to the sat link chain, the titrators sats-titi and sats-titw.

- The capture network (thin multi-coloured network), which comprises servers sats-cw and sats-ci, and at present five copper taps that capture traffic heading to the island side.

- The campus network (black), which allows external access for configuration, software downloads/upgrades, experiment monitoring and control. Having this network allows us to interact with the entire world side and the core “sat link” machines without having to send that traffic via the network we’re experimenting with. Ideally, we’d love to do the same for our Pis and NUCs, but they only have one Ethernet interface, and the thought of a myriad of dongles in the already fairly tightly packed racks does not appeal!

The storage server sats-storage is external to the racks. It also acts as the “command & control” machine: This is where the scripts run that orchestrate the experiments and distribute tasks to the other components of the simulator.

In an experiment, the storage server starts the world servers, configures the link, and starts packet capture on either side of the link via the green network. It then starts the Pis and NUCs on the dark blue “island” network, which send TCP connection requests to the servers on the light blue “world” network. The IP packets carrying these connection requests travel via the simulated satellite link (the thick coloured network) to the “world servers”, which send data back via the reverse path. Each NUC and each Pi maintains a certain number of active sockets for these connections. This number is configurable, which allows us to control the demand for downloads from the world side incrementally.
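As a rough illustration of how a client might hold a configurable number of sockets open, here is a minimal Python sketch. It is not the simulator's actual client software: the names `download_once` and `run_client`, the round-robin choice of server, and the assumption that a server sends data and then closes the connection are all hypothetical.

```python
import socket
import threading


def download_once(host, port, bufsize=65536):
    """Open one TCP connection, read until the server closes it, return the byte count."""
    total = 0
    with socket.create_connection((host, port)) as sock:
        while chunk := sock.recv(bufsize):
            total += len(chunk)
    return total


def run_client(servers, num_sockets, stop_event, fetch=download_once):
    """Keep `num_sockets` downloads in flight until `stop_event` is set.

    Each worker thread owns one connection at a time and replaces it with a
    new one as soon as it finishes, so the number of active sockets stays at
    `num_sockets`. Servers are picked round-robin (an assumption).
    """
    def worker(index):
        turn = index
        while not stop_event.is_set():
            host, port = servers[turn % len(servers)]
            try:
                fetch(host, port)
            except OSError:
                # Failed connection: pause briefly, then replace it.
                stop_event.wait(0.1)
            turn += num_sockets

    threads = [
        threading.Thread(target=worker, args=(i,), daemon=True)
        for i in range(num_sockets)
    ]
    for thread in threads:
        thread.start()
    return threads
```

Raising `num_sockets` by one adds exactly one more concurrent download, which is the kind of incremental demand control described above.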

The special purpose Pis on either side of the “satellite link” act as signalling devices. Because we’re capturing on two machines with different clocks, and the same packets pass through the various components of the simulated link at different times, we need to synchronise the packet traces taken on either side. This is difficult to do based on packets between island clients and world servers, as these are not unique: address and port combinations can and do occasionally repeat across connections. However, the special purpose machines don’t take part in the cacophony that is the demand generation, so we use them to exchange a ping at the start and another ping at the end of each experiment. These pings are seen in all packet traces and give us a common reference across all of them. That is, we know: If a packet is seen in a trace between the two ping events, it’s part of our experiment. If it’s missing on the far side of the link, it was dropped. A packet seen before the start ping or after the stop ping may be missing from another trace because capture there started late or stopped early, rather than because it was dropped.
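The ping-based alignment can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the analysis code used with the simulator: the `Packet` record, its `key` fingerprint field, and the `is_sync_ping` flag are hypothetical, and the sketch assumes each trace has already been parsed into a time-ordered list.

```python
from collections import Counter
from typing import NamedTuple


class Packet(NamedTuple):
    timestamp: float            # local capture clock; never compared across traces
    key: str                    # hypothetical packet fingerprint
    is_sync_ping: bool = False  # True for the start/stop pings of the special purpose Pis


def experiment_window(trace):
    """Return the packets strictly between the first and the last sync ping."""
    ping_positions = [i for i, pkt in enumerate(trace) if pkt.is_sync_ping]
    if len(ping_positions) < 2:
        raise ValueError("trace needs both a start and a stop ping")
    start, stop = ping_positions[0], ping_positions[-1]
    return trace[start + 1:stop]


def dropped_packets(near_trace, far_trace):
    """Count packets seen inside the near-side window but not the far-side one.

    A Counter (multiset) is used so that repeated fingerprints are matched
    one for one instead of being collapsed into a single entry.
    """
    near = Counter(pkt.key for pkt in experiment_window(near_trace))
    far = Counter(pkt.key for pkt in experiment_window(far_trace))
    return near - far
```

Because each trace is cut at its own copy of the two pings, the two capture clocks never need to agree; only the order of packets relative to the pings matters.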

The world-side special purpose server has two jobs in a measurement: The first is to send a continuous series of fast small pings to NUC4 on the island side. Small pings can usually slip into a queue at any time even when larger packets are already being rejected. We use this to get an estimate of queue sojourn time. The second job is to send a large TCP transfer to NUC4 during an experiment – so we can see how a standardised transfer fares in a particular scenario (i.e., how long it takes).
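One simple way to turn the ping series into a sojourn time estimate is to subtract the smallest round-trip time observed from every sample, on the assumption that the fastest ping met an empty queue. The text above does not spell out how the estimate is actually computed, so treat the following Python sketch, including the function name `sojourn_estimates`, as an assumption rather than the method used.

```python
def sojourn_estimates(rtts_ms):
    """Estimate per-ping queueing delay as RTT minus the smallest RTT seen.

    Assumes the minimum RTT reflects an empty queue, so any extra delay is
    attributed to queueing somewhere along the round trip.
    """
    if not rtts_ms:
        raise ValueError("need at least one RTT sample")
    baseline = min(rtts_ms)
    return [rtt - baseline for rtt in rtts_ms]
```

Note that this attributes all excess delay to queueing and does not separate the forward path from the return path.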